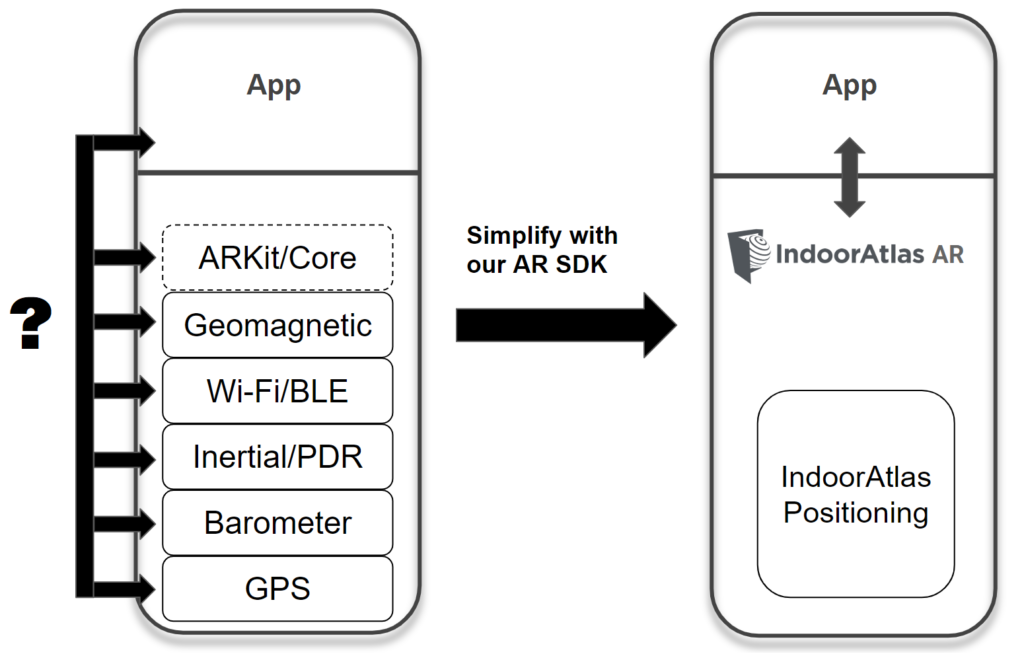

Over the last few years, we have seen several partner companies take on the challenge of creating engaging customer experiences by combining AR with IndoorAtlas’ geomagnetic-fused indoor positioning. On the surface, this appears to be a simple 1+1 combination: use ARKit on iOS or ARCore on Android, and get accurate device positioning from the IndoorAtlas SDK. In truth, such endeavors burn resources and do not always produce good results.

Two of the main challenges are reconciling the centimeter-accurate visual-inertial odometry (VIO) from ARKit/ARCore with the meter-scale errors of indoor positioning, and handling the math required to transform between coordinate systems so that AR objects don’t appear jumpy on the screen. The new IndoorAtlas SDK solves these challenges by including built-in support for ARKit/ARCore VIO and fusing it with our industry-leading indoor positioning. All this is done under the hood, so the developer does not need to worry about complicated math and coordinate transformations. The early adopters of our new AR SDK have all said the same thing: not having to combine indoor positioning from one vendor with an AR solution from another is one of the reasons that make IndoorAtlas AR great!
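To give a feel for the coordinate math involved, here is a minimal sketch of placing a geo-referenced point into an AR session's local frame. It is not the IndoorAtlas API: the function names, the flat-earth approximation, and the `heading_rad` parameter (the compass bearing of the AR session's forward axis) are all assumptions made for illustration. It assumes the ARKit/ARCore convention of a right-handed frame with y up and -z forward.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS84 equatorial radius


def geo_to_east_north(lat, lon, origin_lat, origin_lon):
    """Approximate east/north offsets (meters) from an origin.

    Uses a flat-earth approximation, which is only reasonable
    over building-sized distances.
    """
    d_lat = math.radians(lat - origin_lat)
    d_lon = math.radians(lon - origin_lon)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def east_north_to_ar(east, north, heading_rad):
    """Rotate east/north offsets into hypothetical AR x/z coordinates.

    heading_rad is the assumed compass bearing (clockwise from north)
    of the AR session's forward (-z) axis. Returns (x, z), where x
    points right and z points backward.
    """
    forward = east * math.sin(heading_rad) + north * math.cos(heading_rad)
    right = east * math.cos(heading_rad) - north * math.sin(heading_rad)
    return right, -forward
```

For example, if the AR session happens to face east (`heading_rad = math.pi / 2`), a point 10 m north of the origin ends up 10 m to the left, at roughly `x = -10, z = 0`. The hard part in practice is that neither the origin nor the heading is known exactly, and both estimates change over time.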

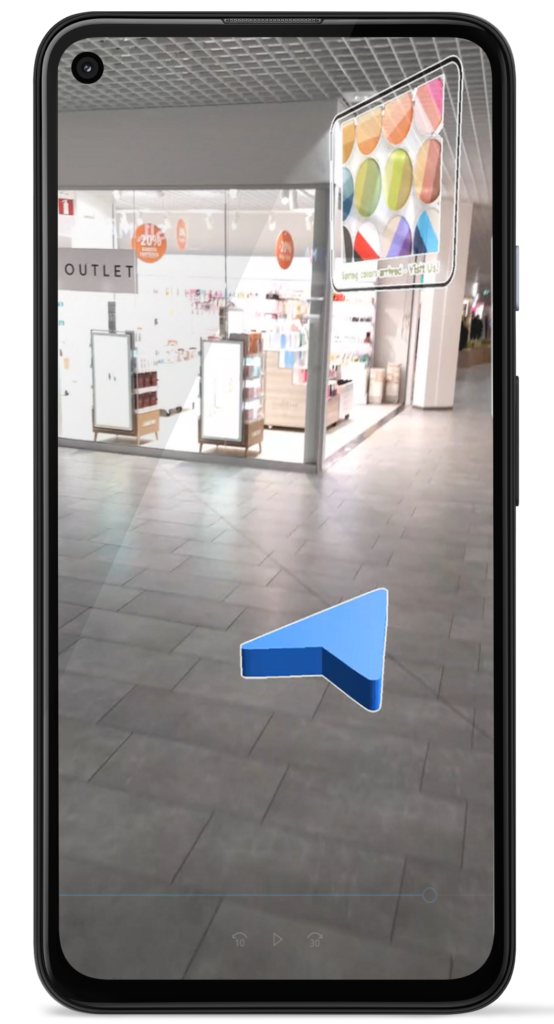

IndoorAtlas Example AR App with Wayfinding arrow and 2D POI

In the past, many positioning technologies have been combined with ARKit/ARCore to implement indoor wayfinding. Visual markers give the exact location and orientation of the device when the marker is scanned, but once the user has walked far enough from the marker, the position estimate drifts and ruins the navigation experience unless a new marker is scanned. BLE beacons and WiFi can continuously give an approximate user position, but the positioning is too inaccurate and jumpy to create an enjoyable AR wayfinding experience. UWB gives good positioning accuracy, but requires line of sight to the device and expensive hardware installation. Finally, combining any of those with VIO tracking is far from easy outside the lab, and the effort easily becomes prohibitive for real-size deployments.

As described above, both the core IndoorAtlas positioning SDK and Augmented Reality frameworks (ARCore/ARKit) track the movement of a mobile device. However, they focus on different things:

- ARCore and ARKit track the motion accurately in a local metric coordinate system. They can tell you very accurately that the phone moved 3 centimeters to the left and 4 centimeters up compared to where it was 0.3 seconds ago, but the starting position is arbitrary. They do not tell you if the phone is in Finland or in the US. Also, like all inertial-based tracking systems, they need regular position fixes to control the drift caused by the accumulation of small errors.

- IndoorAtlas technology determines the global position and orientation of the device. The SDK tells you your floor level and the position within a floor plan with meter-level accuracy (depending on the case). However, up until now, the location has updated approximately once a second and has not followed centimeter-level changes.
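To illustrate why these two sources complement each other, here is a deliberately naive sketch of combining them. It is an assumption-laden toy, not the IndoorAtlas fusion algorithm: the `NaiveFuser` class, its `gain` parameter, and the 2D translation-only model (no rotation, no floor level) are all hypothetical. The idea is to follow the smooth VIO motion directly while nudging a slowly changing offset toward each global fix, so that a noisy fix does not make the output jump.

```python
class NaiveFuser:
    """Toy 2D fusion of smooth local VIO motion and coarse global fixes.

    Hypothetical illustration only: it estimates a translation between
    the VIO frame and the global frame and ignores rotation entirely.
    """

    def __init__(self, gain=0.1):
        self.gain = gain        # how strongly each fix corrects the offset
        self.vio = (0.0, 0.0)   # latest position in the local VIO frame
        self.offset = None      # estimated VIO-to-global translation

    def on_vio(self, x, y):
        """Store the latest high-rate, centimeter-level VIO position."""
        self.vio = (x, y)

    def on_fix(self, gx, gy):
        """Blend a low-rate, meter-level global fix into the offset."""
        target = (gx - self.vio[0], gy - self.vio[1])
        if self.offset is None:
            # First fix: adopt it fully to anchor the local frame.
            self.offset = target
        else:
            # Later fixes: move only a fraction of the way to avoid jumps.
            self.offset = (
                self.offset[0] + self.gain * (target[0] - self.offset[0]),
                self.offset[1] + self.gain * (target[1] - self.offset[1]),
            )

    def position(self):
        """Return the fused global position, or None before the first fix."""
        if self.offset is None:
            return None
        return (self.vio[0] + self.offset[0], self.vio[1] + self.offset[1])
```

With `gain=0.1`, a fix that disagrees with the current estimate by 2 m moves the output by only 0.2 m, while centimeter-level VIO movement passes through unchanged. A real system also has to estimate the rotation between the frames, weigh each fix by its uncertainty, and handle floor changes, which is where most of the difficulty lies.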

The new IndoorAtlas AR fusion API merges these two sources of information: you can both track centimeter-level changes and know where the device is in global coordinates. This does not mean that we have global centimeter-level accuracy, but it allows you to conveniently use geo-referenced content so that it looks pleasant in the AR world. It also works indoors, where using the digital compass directly for orientation detection is challenging due to calibration issues and local geomagnetic fields, the same phenomenon we embrace in IndoorAtlas geomagnetic-fused positioning. Importantly, like all good APIs, the IndoorAtlas AR SDK hides the complexity from the developer. The image below illustrates this:

One great benefit of the IndoorAtlas AR SDK is that IndoorAtlas positioning has been in production use for several years with millions of users and has been deployed to some of the largest venues in the world. The benefits of the AR SDK include:

- Same easy-to-use deployment workflow as with indoor positioning, no extra steps!

- Makes it easy to place geo-referenced content, such as POIs, in the AR world.

- Built-in AR Wayfinding.

- Integrates the VIO tracking from ARCore/ARKit into IndoorAtlas positioning.

- Enables presenting AR objects in a visually pleasing manner during navigation.

- Lets the developer use any type of 3D models and rendering toolkit.

- The AR SDK is available for Unity as well as native Android and iOS.

A novelty in the IndoorAtlas AR SDK is that the platform-provided AR motion tracking (VIO) is deeply integrated into IndoorAtlas’ positioning sensor fusion framework, which allows us to provide the robustness and accuracy of the sensor fusion algorithm. When the phone is placed in, for example, a bag or pocket, which occludes the camera and disables AR and VIO, indoor positioning still continues in the background mode of the IndoorAtlas SDK (added in SDK 3.2). When the AR session is resumed, AR wayfinding can instantly continue from the right location.

The video below shows an example where POIs in the IndoorAtlas CMS are visualized as AR advertisements. The mall in the video was fingerprinted, and the POIs and wayfinding were created, in an hour, demonstrating the scalability of IndoorAtlas technology.

IndoorAtlas AR is available for free evaluation right now. To test it, you need an IndoorAtlas account and a fingerprinted venue. Test with:

- IndoorAtlas Positioning iOS app

- IndoorAtlas Showcase App on Android (contact IndoorAtlas)

- Programming your own app using the AR SDK: contact IndoorAtlas sales & support.

API documentation for the new AR fusion API can be found

If you have any questions or would like to discuss the new AR features with us, please contact IndoorAtlas sales & support.